Just a bit of small talk first: I recently switched from Google Chrome to the Arc browser, and I think it's splendid. One thing I like about it is that it supports Chrome extensions, so I can keep using the Vimium extension. And unlike Google Chrome, which tends to get cluttered with lots of tabs or separate windows, Arc keeps everything contained in a single window.

By default, Arc automatically archives inactive tabs after 12 hours to keep the interface clean. Personally, I'd prefer tabs to close after about 2-3 days, but unfortunately that can’t be configured at the moment. Anyway, I'll stick with it for a while.

Goroutines Equivalent

Let's cut to the chase. I've been curious about which performs better: goroutines, or Rust with the may library. So I decided to benchmark them and share my findings in this article.

But wait, what is "may"?

I found this post on Reddit, and it mentions may:

There is also "may" which attempts to be a Rust version of goroutines. I have not used it though, so can't comment on anything further about it.

Its README explains it like this:

May is a high-performance library for programming stackful coroutines with which you can easily develop and maintain massive concurrent programs.

In other words, the may library can be considered Rust's equivalent of goroutines.
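To make "massive concurrent programs" concrete on the Go side, here's a minimal sketch (my own illustration, not part of the benchmark) that starts 100,000 goroutines and waits for all of them with sync.WaitGroup. The function name sumWithGoroutines and the goroutine count are arbitrary. This spawn-lots-and-wait style is the kind of program may aims to make easy in Rust.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// sumWithGoroutines (hypothetical helper) starts one goroutine per
// number in [1, n]; each adds its number to a shared atomic counter.
// Goroutines are cheap enough that spawning 100,000 of them is routine.
func sumWithGoroutines(n int) int64 {
	var wg sync.WaitGroup
	var total atomic.Int64 // Go 1.19+; safe for concurrent Add

	for i := 1; i <= n; i++ {
		wg.Add(1)
		go func(v int) {
			defer wg.Done()
			total.Add(int64(v))
		}(i)
	}
	wg.Wait() // block until every goroutine has finished
	return total.Load()
}

func main() {
	fmt.Println(sumWithGoroutines(100_000)) // n(n+1)/2 = 5000050000
}
```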

To be honest, I hadn't heard of may until I saw that comment.

There are numerous comparison articles on this topic, particularly ones comparing goroutines with Rust's tokio. However, I couldn't find any good ones about may, so I decided to write the article myself.

Additionally, the tokio documentation states:

If your code is CPU-bound and you wish to limit the number of threads used to run it, you should use a separate thread pool dedicated to CPU bound tasks. For example, you could consider using the rayon library for CPU-bound tasks.

Since tokio excels at I/O-bound tasks, I chose may over tokio here, assuming may is better suited to CPU-bound work. Having said that, I'm also curious how tokio compares with goroutines.

Just to be clear upfront, this is not a comparison between Rust and Golang, but rather between the may library and goroutines.

And admittedly, comparing the two may be somewhat unfair.

Preparing the Benchmark

Machine Specs

- OS: macOS Sonoma 14.6
- CPU: Apple M3 Pro (11 cores)
- Memory: 36GB

Installing Rust

```shell
% curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh
% cargo --version
cargo 1.80.0 (376290515 2024-07-16)
```

Installing Go

For Go, we can simply use brew:

```shell
% brew install go
% go version
go version go1.22.3 darwin/arm64
```

Alright, that completes the environment setup.

Heavy CPU-bound Task

For the heavy task, I chose to recover the password of a password-protected zip file by trying every entry in a dictionary file, a form of brute-force attack known as a dictionary attack.

For testing, I downloaded a password dictionary containing over 5 million passwords from SecLists:

```shell
curl -O https://raw.githubusercontent.com/danielmiessler/SecLists/master/Passwords/xato-net-10-million-passwords.txt
```

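Then I protected a zip file with a password picked at random from the dictionary:

```shell
# Create a small file to put in the archive
echo "This is a text file." > plain.txt
# Pick one random password, show it on the terminal via tee, and use it to encrypt target.zip
zip -P "$(shuf -n 1 xato-net-10-million-passwords.txt | tee /dev/tty)" target.zip plain.txt
```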

I ran the password search 10 times using this source code and calculated the average processing time.

Result

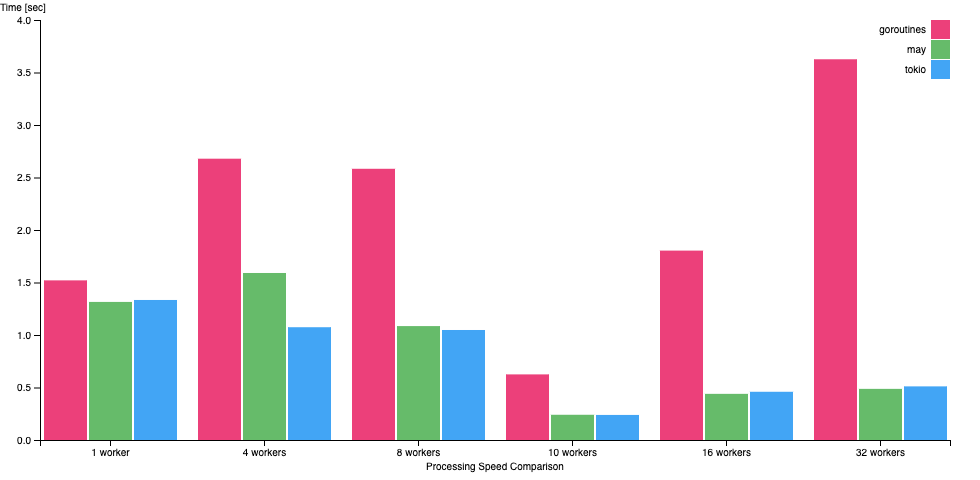

Let's review the chart. The horizontal axis shows the number of workers, and the vertical axis shows processing time, so lower values mean faster processing.

According to the chart, 10 workers delivers the best performance.

Also, tokio performs just as well as may.

Given that the M3 Pro chip in my MacBook has 11 cores, as the sysctl output below confirms, it makes sense that 10 workers gives the fastest results.

```shell
% sysctl -n hw.ncpu
11
```

Remarkably, with goroutines, 4-8 workers are actually slower than a single worker. One possible reason is the increased cost of context switching in the Go runtime. For a more thorough explanation of Go's scheduler, head over to this post by "Golang By Example".

Until next time, happy coding and have fun with benchmarks!

We’re looking for talented people eager to innovate and grow with us. Check out our company page on LinkedIn!